IARC7 Update June 13 2017

The University of Pittsburgh’s IARC Mission 7 team is glad to announce that we have graduated from version 0.1 to v1.0 of our hardware stack. With only a few weeks remaining until the Mission date, we are in the midst of our final hardware and software tests.

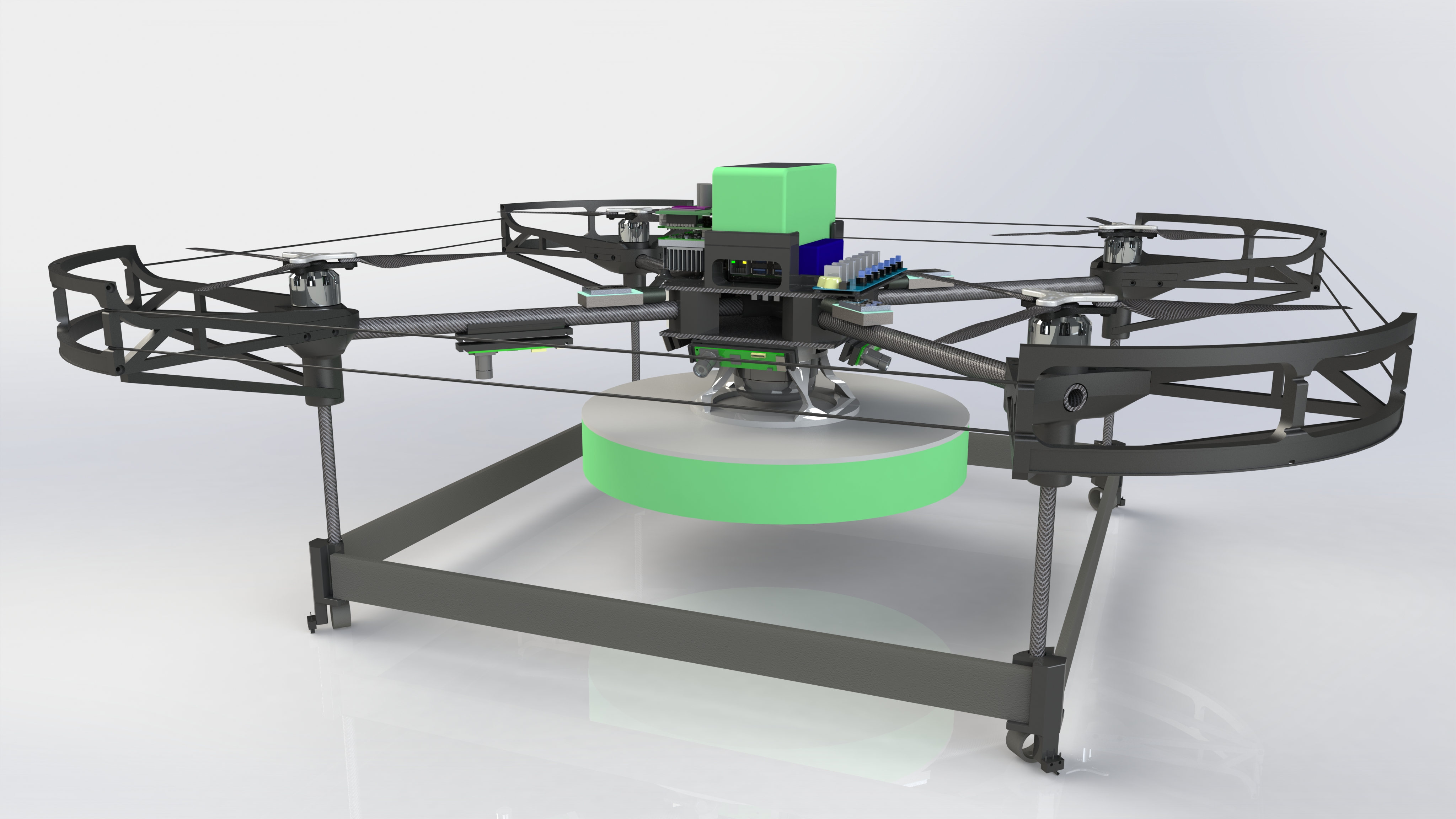

Changes to the quadrotor include stronger motors, carbon fiber propellers and structural rods, a Jetson TX2 computer, and a multitude of cameras and sensors.

The v1.0 update did not come without challenges, and some details of our initial plans for v1.0 were modified as new information came to light.

Initial testing showed that v1.0 is capable of autonomous takeoff, landing, and height holding. Autonomous translation tests and Roomba tracking tests on the hardware stack are scheduled to be completed soon.

IARC7 Article

For in-depth information on the methodology behind v1.0’s construction and software design, the publication presented by the University of Pittsburgh Robotics and Automation Society for IARC Mission 7 can be accessed here.

Mechanical

Version 1.0 replaces the aluminum parts of v0.1 with carbon fiber supports to reduce weight without sacrificing mechanical strength, whereas parts that are designed to break in crash scenarios are 3D printed with ABS plastic to maximize the speed of manufacturing replacement parts.

This design makes testing the hardware stack less risky, since replacement parts can be printed rapidly and cheaply.

Propellers

The plastic propellers used for v0.1 have been upgraded to KDE Direct 12.5x4.3(L) triple blade props. These props proved to be a good choice for us, as they do not need to be balanced, and their carbon fiber material is significantly lighter than plastic propellers of the same size.

Propeller Guards

The propeller guards on v1.0 have been slightly enlarged compared to v0.1, as the newer carbon fiber propellers are simply too large for the old model. v1.0’s propeller guards also feature new structural supports to enhance their rigidity.

Electronics

Safety circuit

The safety circuit has been revamped to tolerate higher loads across its power MOSFETs. The IRL40B209 was selected for its low on-resistance (Rds(on)), high Vds and Id tolerances, and low gate turn-on voltage.

Battery

The v1.0 quadrotor has two onboard batteries: one for flight, and the other for sensors and computers. The battery supplying power to the motors is rated at 8000 mAh and 21.2 V, and is a significant payload in terms of weight. The flight controller communicates with the Jetson computer in order to determine the battery’s voltage, and to initiate proper shutdown routines if the voltage is too low.
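The low-voltage shutdown described above can be sketched as a small monitor that only requests shutdown after several consecutive low readings, so that a brief sag under motor load does not end a flight. This is a minimal illustration, not the team's implementation: the class name `LowVoltageMonitor`, the cutoff voltage, and the sample count are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LowVoltageMonitor:
    """Latches a shutdown request after sustained low battery voltage."""

    threshold_v: float            # hypothetical cutoff, not a value from the team
    required_samples: int = 5     # consecutive low readings needed to latch
    _low_count: int = 0
    shutdown_requested: bool = False

    def update(self, voltage_v: float) -> bool:
        """Feed one voltage reading; return True once shutdown is requested."""
        if self.shutdown_requested:
            return True           # latched: stays requested even if voltage recovers
        if voltage_v < self.threshold_v:
            self._low_count += 1
        else:
            self._low_count = 0   # a healthy reading resets the streak
        if self._low_count >= self.required_samples:
            self.shutdown_requested = True
        return self.shutdown_requested


if __name__ == "__main__":
    monitor = LowVoltageMonitor(threshold_v=19.0, required_samples=3)
    for reading in (21.0, 18.5, 18.7, 20.0, 18.0, 18.0, 18.0):
        print(reading, monitor.update(reading))
```

Latching the request is a design choice: battery voltage tends to rebound once the load drops, and the shutdown routine should not be cancelled by that rebound.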

Power Delivery

To connect multiple USB devices to the Jetson, which has a single USB 3.0 port, a USB 3.0 hub was used. This hub had several power issues, and connecting several devices at once produced noticeable voltage sag. To mitigate this problem, several capacitors were connected to the hub’s power terminals.

Sensors

To measure distance from the ground, a LIDAR-Lite sensor was used to provide accurate height readings up to six meters. A time of flight sensor was utilized to give the Jetson information on how long it has been airborne. Four long-throw bump switches were attached to the legs of the quad in order to detect when the robot has touched a surface.

Cameras

Initially we planned to use five e-con Systems See3CAM USB 3.0 cameras for computer vision, but found that the cameras worked only intermittently and were not suitable for use with the Jetson TX1. Our solution was to use a Logitech C920 and two Microsoft LifeCams, which have great compatibility with GNU/Linux. A Raspberry Pi 3 Model B was added to reduce the CPU load of decoding compressed video on the Jetson. The Pi has ROS installed on it, which allows for simple communication with the Jetson over the LAN.

Software

All of our developed software is freely licensed under the GPLv2, and is available to fork on GitHub.

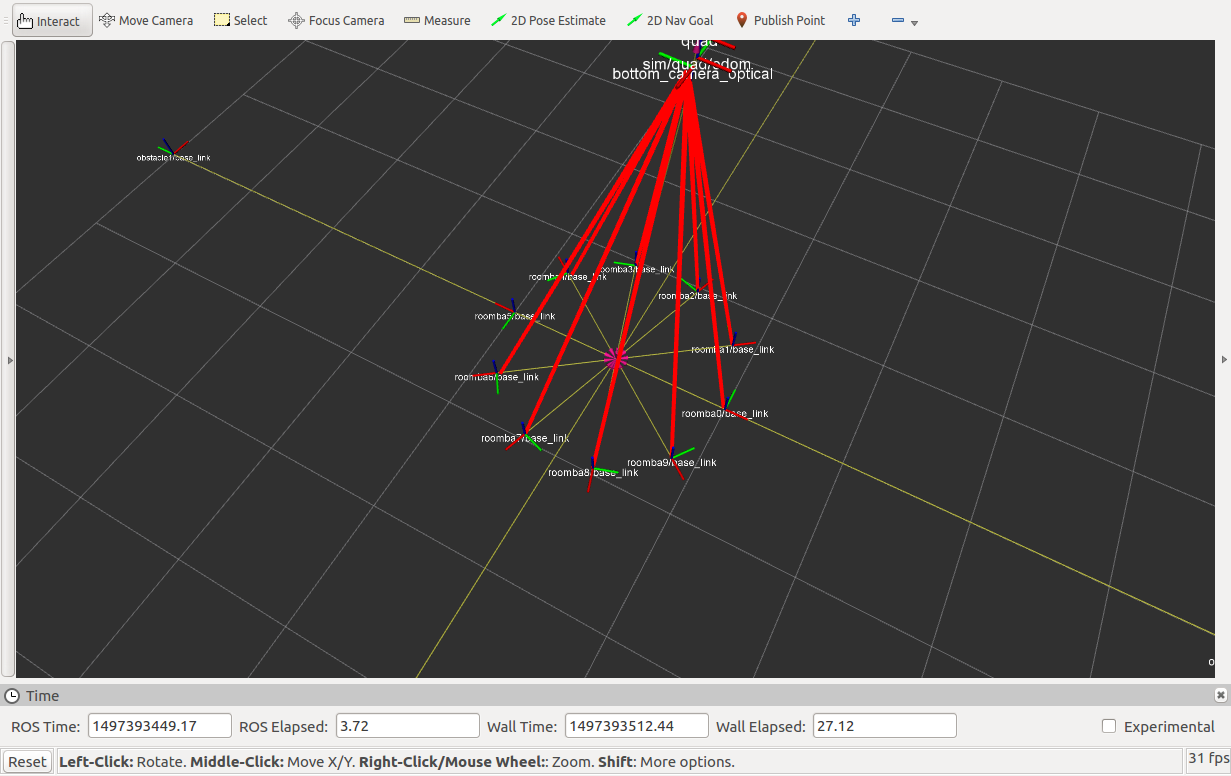

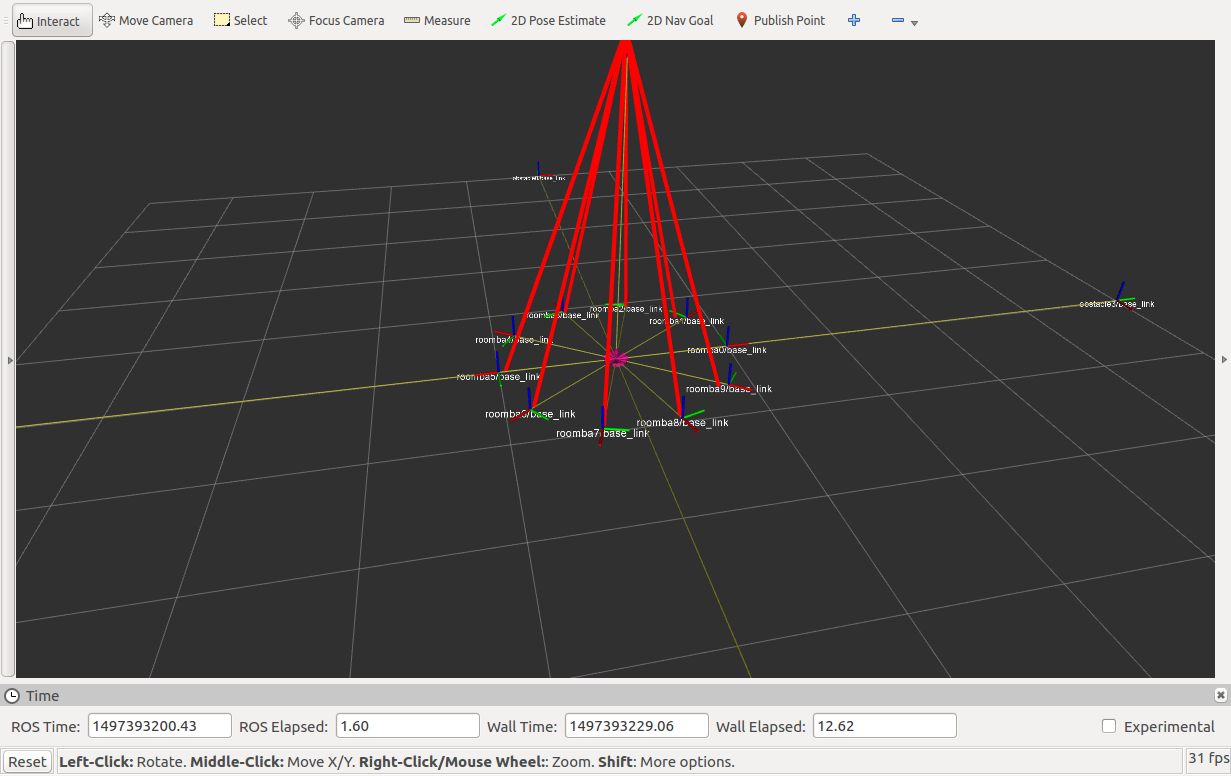

Simulator

The MORSE simulator currently supports several ROS tasks, including taking off and landing, Roomba tracking, and waypoint translation. Tests to improve computer vision and Roomba tracking are being integrated.

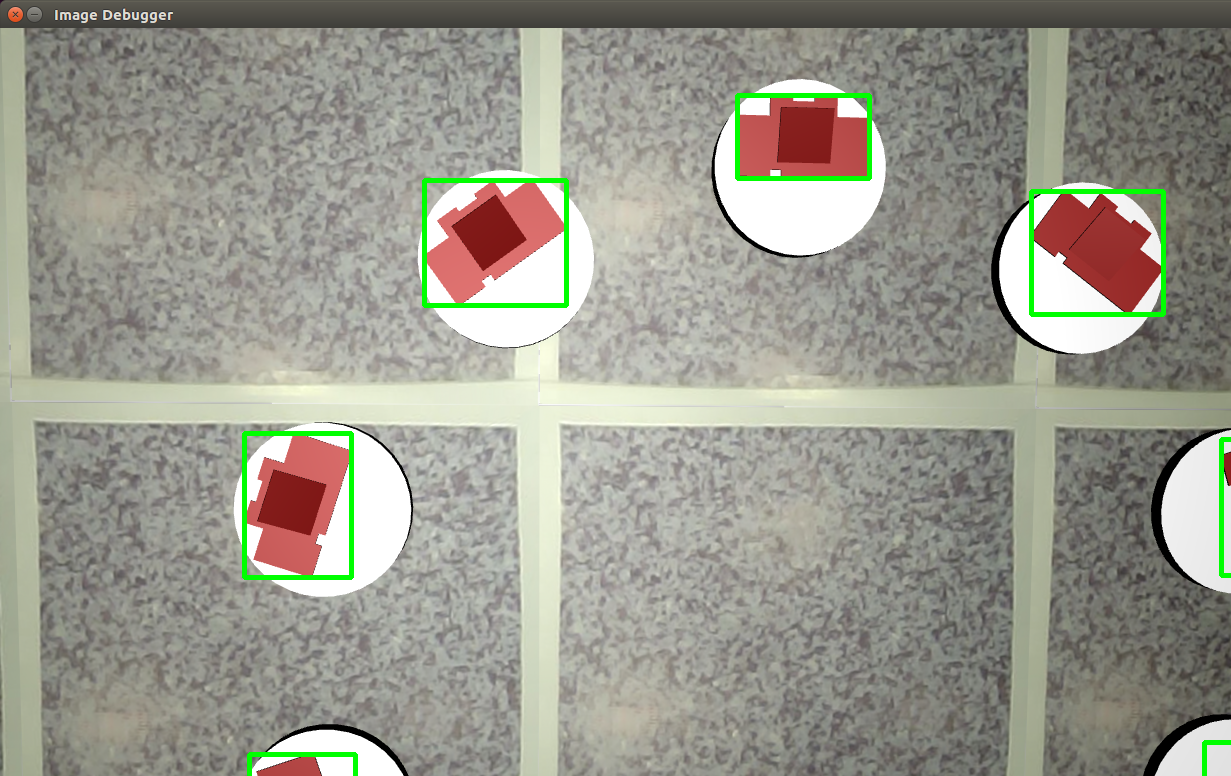

Computer Vision

A simple algorithm to find blobs of colored pixels to detect Roombas was added. This algorithm uses HSV color filtering to eliminate most of the image, edge detection to find the Roomba color blobs, bounding box calculation around the detected edges, and finally rejection of the boxes that are too small to be a Roomba.
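The stages above can be illustrated with a small standard-library sketch. It is not the team's code. To stay dependency-free it swaps the edge-detection step for a flood fill over 4-connected pixels, which yields the same kind of bounding boxes. The function names, the HSV thresholds, and the `min_area` cutoff are hypothetical.

```python
import colorsys
from collections import deque


def hsv_mask(image, h_range, s_min, v_min):
    """Return a boolean mask of pixels whose HSV values pass the filter.

    image is a list of rows of (r, g, b) tuples in 0-255; h_range, s_min and
    v_min use colorsys's 0.0-1.0 scale. Hues that wrap around 0 (red) would
    need two ranges, which this sketch does not handle.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            mask_row.append(h_range[0] <= h <= h_range[1] and s >= s_min and v >= v_min)
        mask.append(mask_row)
    return mask


def blob_boxes(mask, min_area):
    """Return (x, y, w, h) boxes around connected blobs, dropping small boxes."""
    height = len(mask)
    width = len(mask[0]) if mask else 0
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Flood fill one blob while tracking its extent.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            x_min = x_max = x0
            y_min = y_max = y0
            while queue:
                x, y = queue.popleft()
                x_min, x_max = min(x_min, x), max(x_max, x)
                y_min, y_max = min(y_min, y), max(y_max, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < width and 0 <= ny < height and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            w, h = x_max - x_min + 1, y_max - y_min + 1
            if w * h >= min_area:  # reject boxes too small to be a Roomba
                boxes.append((x_min, y_min, w, h))
    return boxes
```

For example, with a hypothetical green target, `blob_boxes(hsv_mask(image, (0.25, 0.42), 0.5, 0.5), min_area=4)` keeps a 3x3 green patch and drops an isolated green pixel.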

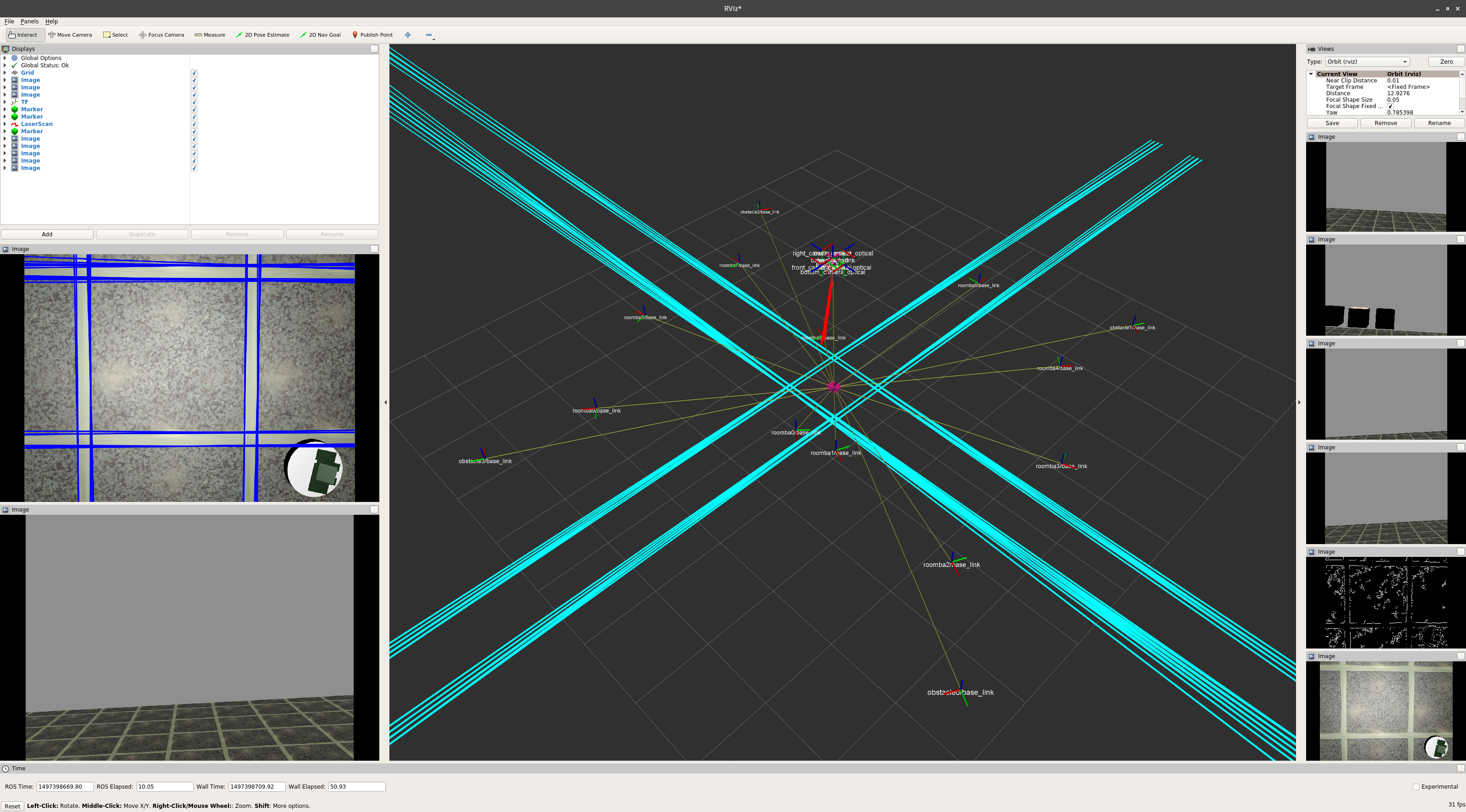

Shown below is the Roomba detection algorithm working in tandem with line detection for robot localization.

GStreamer Pipeline

In order to obtain proper MJPEG video for OpenCV to work with, the video from the webcams had to be converted from the I420 to the RGBA colorspace. This conversion proved to be fairly taxing on the CPU, so the Jetson’s NVMM memory was leveraged to improve the conversion rate. Initially there was some difficulty transferring images from NVMM memory to system memory using GStreamer, but some investigation and help from Nvidia developers led to our solution, available here.
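To give a sense of why this conversion is costly on a CPU, the sketch below converts one planar YUV 4:2:0 frame to packed RGBA in plain Python. It is not the team's GStreamer solution, and the function name is hypothetical. It assumes BT.601 limited-range video and even frame dimensions, neither of which the webcams are confirmed to use.

```python
def _clamp(value):
    """Round to the nearest integer and clamp to the 0-255 byte range."""
    return max(0, min(255, int(round(value))))


def i420_to_rgba(frame, width, height):
    """Convert one planar 4:2:0 frame (Y, then U, then V planes) to RGBA bytes.

    Assumes BT.601 limited-range coefficients and even dimensions.
    """
    if width % 2 or height % 2:
        raise ValueError("this sketch assumes even dimensions")
    y_size = width * height
    c_width = width // 2
    c_size = c_width * (height // 2)
    if len(frame) != y_size + 2 * c_size:
        raise ValueError("unexpected frame length")
    u_plane = y_size               # U plane follows the full-resolution Y plane
    v_plane = y_size + c_size      # V plane follows the U plane
    out = bytearray(y_size * 4)
    for row in range(height):
        for col in range(width):
            # Each 2x2 block of pixels shares one U and one V sample.
            c_index = (row // 2) * c_width + col // 2
            y = 1.164 * (frame[row * width + col] - 16)
            u = frame[u_plane + c_index] - 128
            v = frame[v_plane + c_index] - 128
            i = (row * width + col) * 4
            out[i] = _clamp(y + 1.596 * v)                  # red
            out[i + 1] = _clamp(y - 0.391 * u - 0.813 * v)  # green
            out[i + 2] = _clamp(y + 2.018 * u)              # blue
            out[i + 3] = 255                                # opaque alpha
    return bytes(out)
```

Every output pixel needs several multiplications plus a chroma lookup, so the work grows with resolution and frame rate, which is the kind of load that benefits from being moved off the CPU.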